Three Goats and a Dog

OpenAI's Chat, Alexa Upgrade, Meta's Touch AI, & OpenAI Roadblock

Rise and Shine. When Massachusetts firefighters spotted a mother goat, her two tiny hoofed hooligans, and their dog buddy casually strutting past the station, they knew their day was about to get a lot weirder. With the help of a neighbor—who just so happened to be walking his own dog and seemed oddly prepared for a goat-leashing operation—they coaxed the bewildered mama goat inside, with her adorable “kid” entourage bouncing along like little popcorn kernels. The firefighters, clearly untrained in goat management, set up a “VIP pen” out back for their surprise guests, all while the dog looked on, probably wondering why his pals didn’t get the memo about the leash. By the end, the firefighters learned that not all rescues involve flames—sometimes, it’s just about giving a wandering goat family their moment of fame.

Top Stories

OpenAI’s Got a New Search Game in Town

OpenAI

The days of typing out precise Google searches might be winding down. OpenAI has just launched ChatGPT Search, a new feature within ChatGPT designed to fetch fast, detailed answers from across the web. Built on a fine-tuned GPT-4o model, ChatGPT Search delivers info on sports, stocks, news, and more, complete with photos and links. Whether it’s weekend plans or live election results, ChatGPT Search pulls data from sources like AP and Reuters.

But OpenAI’s approach isn’t just about answers; it’s about keeping the conversation going. Each response shows in-line and sidebar attributions so users can check sources, and users can ask follow-up questions in the same conversation. Users can also click the web search icon to manually guide the search. It’s a conversational search engine that knows where it got its info and shares it.

ChatGPT Plus and Team users get first access to ChatGPT Search on mobile and web, with enterprise and education accounts up next. Free users can expect access soon, and OpenAI has also released a Chrome extension to make ChatGPT Search the default search engine.

Not everyone’s thrilled, though. Publishers are concerned that AI summaries like ChatGPT Search might pull traffic from original sites, with studies suggesting a possible 25% dip in publisher visits. OpenAI says it’s listening, refining how it chooses articles, summaries, and quotes based on partner feedback. Future plans include adding ChatGPT Search to its Advanced Voice Mode and expanding into shopping and travel.

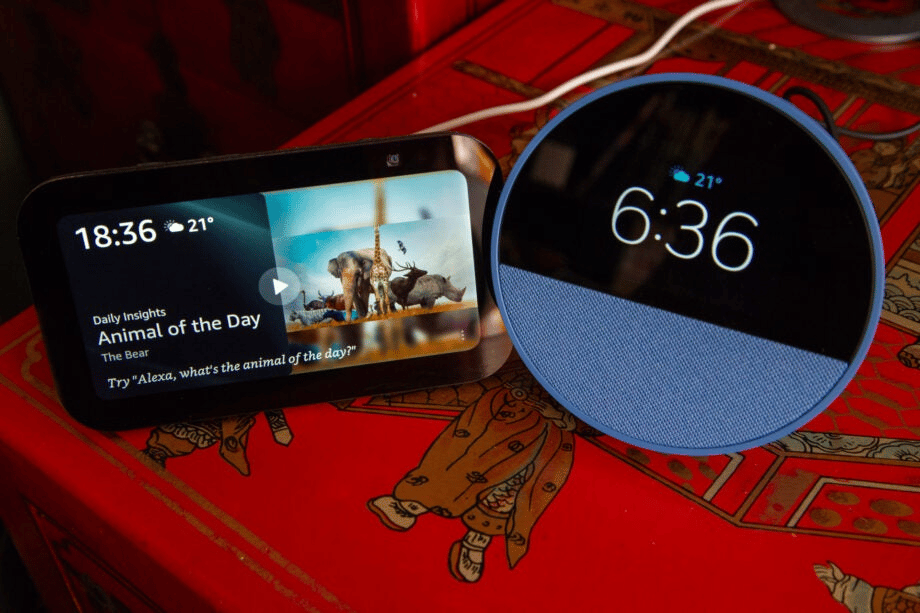

Alexa Levels Up with New Powers

Amazon / Alexa

Amazon’s Alexa is gearing up for a serious upgrade, with CEO Andy Jassy teasing a more "agentic" version of the voice assistant that could handle tasks on your behalf. No longer just answering questions or pulling up the weather, this new Alexa would be able to take real actions, potentially making life a whole lot easier (or at least hands-free). “You can imagine us being pretty good at that with Alexa,” Jassy said confidently during Amazon’s Q3 earnings call, hinting at the potential of a next-gen assistant powered by advanced generative AI.

Autonomous Actions: The upcoming Alexa will be able to act autonomously on users’ behalf, marking a shift from just answering questions.

New Foundation Models: Amazon is re-architecting Alexa with foundation models from Anthropic, an AI partner Amazon has invested in heavily.

The tech behind this revamped Alexa is still in development, though it’s not without challenges. During testing, the upgraded assistant reportedly struggled with basic tasks like turning on smart lights, taking up to six seconds to respond. Internally called “Remarkable Alexa,” it’s rumored to launch as a subscription-based service costing $5 to $10 monthly, with a more basic free option still available.

Delayed Release: Though reports initially suggested a late 2024 launch, Bloomberg now indicates the timeline has slipped into 2025.

Despite being in more than half a billion homes worldwide, Alexa hasn’t turned into the profit machine Amazon hoped for. The company’s devices division has reportedly lost tens of billions of dollars since 2017, making Alexa more of a household name than a revenue driver. Whether this ambitious new Alexa will change that remains to be seen, but Amazon seems committed to finding out.

Meta Teams Up to Bring Touch to AI

Meta

Meta’s taking a big step into the tactile world, announcing a partnership with sensor company GelSight and South Korea’s Wonik Robotics to push the boundaries of touch for AI. These aren’t your average gadgets, though—they’re high-tech sensors made for scientists and researchers, aimed at helping AI understand the physical world in greater depth. Meta envisions this as a leap forward in developing AI that doesn’t just observe the world but feels it.

Partnered with GelSight: Working on the Digit 360, a new tactile fingertip sensor with “human-level” sensing.

Teaming with Wonik Robotics: Developing an upgraded Allegro Hand, a robot hand integrated with tactile sensors.

GelSight’s Digit 360 is an impressive little device, designed as a “tactile fingertip” with an AI chip onboard and 18 different sensing features. Meta says this device goes beyond just touch, using sensors to capture texture, temperature, and even scent. It’s like giving robots a hyper-sensitive, human-like sense of touch, with each interaction creating a unique profile based on surface properties.

On the robotics side, Meta’s collaboration with Wonik will produce a new generation of the Allegro Hand, a robot hand with built-in tactile sensors like the Digit 360. The upcoming version will feature new control boards that encode data from the tactile sensors onto a host computer, allowing richer and more detailed interpretations of the environment. Together, these upgrades should make the Allegro Hand one of the most “sensitive” hands on the market.

Both Digit 360 and the Allegro Hand are expected to be available for purchase next year, and Meta’s even opened up proposals for researchers who want early access. By blending tactile perception with AI, Meta’s pushing the boundaries on how machines interact with the world, hoping these advances will lead to AI that understands, models, and ultimately learns from its environment.

OpenAI Hits Roadblocks on the Path to New Releases

Neon Bees

OpenAI CEO Sam Altman got real in a Reddit AMA, sharing that compute power shortages are slowing the company down. “All of these models have gotten quite complex,” he said, adding that OpenAI faces tough choices on how to allocate resources. Reports suggest OpenAI’s been working with Broadcom on a custom AI chip, expected in 2026, to tackle these issues.

One project impacted by limited compute is ChatGPT’s Advanced Voice Mode. The May demo featured the AI responding to visual cues, but Altman said that capability is still far off. Interestingly, the demo was reportedly rushed to compete with Google’s I/O event, and some within OpenAI felt it wasn’t ready. Even the voice-only mode took longer than expected to launch.

Meanwhile, OpenAI’s next DALL-E update has no release date, and its video tool, Sora, faces delays due to performance and safety tweaks. Rivals like Luma and Runway are making progress while Sora reportedly lags with slow processing times. To add to the challenge, Sora’s co-lead recently left for Google.

Altman also outlined other priorities, like enhancing the o1 series of reasoning models, which got a sneak peek at DevDay. And while GPT-5 isn’t coming soon, Altman promised “some very good releases” later this year. He also hinted that OpenAI might allow NSFW content in ChatGPT someday, saying, “we totally believe in treating adult users like adults.”

Insight of the day…

“One machine can do the work of fifty ordinary men. No machine can do the work of one extraordinary man.”